1 min read

Introducing a Waterless Data Center Architecture for AI Factories

What do you get when you combine the best two-phase, direct-to-chip liquid cooling with world-leading energy-efficient air treatment and climate...

4 min read

Manfreid Chua

:

Oct 14, 2024 7:36:07 AM

Generative Artificial Intelligence (AI) is a once-in-a-lifetime technological development, bringing interactive problem solving to life and accelerating tasks across a myriad of activities. And the amazing thing is, we have only seen the tip of the iceberg. From finding a cure for cancer to driving the development of a new generation of intelligent vehicles, AI is transforming industries, and maybe even the world. While this all seems great, and it is, this newfound marvel is thirsty, and it may be going after the drinking water that was once allocated to you and your community.

AI Factories Need Water, and Lots of It

To really bring AI to life and deliver the processing power needed for generative AI applications such as ChatGPT, data centers have to move to the latest generation of GPUs, such as NVIDIA’s H200 and GB200 and AMD’s MI300X. This migration is critical and will drive a global transition from today’s 10-15 megawatt data centers to 50-100 megawatt AI factories that can deliver the performance this new generation of computing needs. However, an overarching reality of these new AI factories is that they will need huge amounts of water to cool incredibly hot chips, servers, and racks. Water is already a scarce resource across the world, and a 100 megawatt data center can use 1.1 million gallons of water every day.
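To put the 1.1 million gallons per day figure in perspective, the short sketch below converts it to liters and divides it by the energy a 100 megawatt facility would draw in a day. It assumes, purely for illustration, that the full 100 megawatts is drawn around the clock. The function and constant names are hypothetical.

```python
# Back-of-the-envelope conversion of the figures quoted above.
# Assumption (not from the article): the facility draws its full
# 100 MW continuously, 24 hours a day.

LITERS_PER_US_GALLON = 3.785411784  # definition of the US liquid gallon


def daily_water_liters(gallons_per_day: float) -> float:
    """Convert a daily water draw from US gallons to liters."""
    return gallons_per_day * LITERS_PER_US_GALLON


def liters_per_kwh(gallons_per_day: float, power_mw: float) -> float:
    """Water used per kilowatt-hour, assuming a constant power draw."""
    kwh_per_day = power_mw * 1_000 * 24  # MW -> kW, then times 24 hours
    return daily_water_liters(gallons_per_day) / kwh_per_day


gallons = 1_100_000  # gallons per day, from the text
power = 100          # megawatts, from the text

print(f"{daily_water_liters(gallons):,.0f} liters per day")
print(f"{gallons * 365:,.0f} gallons per year")
print(f"{liters_per_kwh(gallons, power):.2f} liters per kWh")
```

Under that assumption, the quoted figure works out to roughly 4.2 million liters a day, about 400 million gallons a year, and about 1.7 liters of water for every kilowatt-hour consumed.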

Until now, the building of new data centers and the water required to run them went largely unnoticed by the average community. In fact, most people did not even realize when a data center popped up near them, because it was often tucked into a storefront or a single floor of a building. This is all changing with generative AI, because the scale of the processing required will demand tremendous amounts of water, land, and energy!

AI Factories Are Coming to a Location Near You!

In a recent keynote, NVIDIA’s CEO Jensen Huang stated:

“The next industrial revolution has begun. Companies and countries are partnering with NVIDIA to shift the trillion-dollar traditional data centers to accelerated computing and build a new type of data center — AI factories — to produce a new commodity: artificial intelligence."

These AI factories will require significant infrastructure and are going to be located in residential and metro areas. They will also be tapping the water supply that was once mainly for human consumption. A telling example is Colón, a municipality in central Mexico that is home to Microsoft’s first hyperscale data center campus in the country. According to news reports, this town of 67,000 is suffering extreme drought. Its two dams have nearly dried up, farmers are struggling with dead crops, and families are relying on trucked and bottled water to meet their daily needs. It also happens to be home to three massive data centers run by Google, Microsoft, and Amazon.

We’re also seeing the same problem in other countries. In the US, a data center in Altoona, a suburb of Des Moines, Iowa, uses up to about one-fifth as much water as the entire city. Considering that Iowa is in the midst of one of its most prolonged droughts in decades, this is a challenging situation for local communities.

How Much Water Does AI Need?

According to Forbes, AI's projected water usage could hit 6.6 billion m³ by 2027. That is a lot of water. Researchers at the University of California, Riverside set out to determine how much water AI actually consumes. They found that training the GPT-3 model in Microsoft’s high-end data centers can directly evaporate 700,000 liters, or about 185,000 gallons, of water. They also found that GPT-3 needs to "drink" a 16-ounce bottle of water for roughly every 10-50 responses it makes. Imagine how much water will be consumed when this model is fielding billions of queries.
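As a rough illustration of how the bottle-per-responses figure scales, the sketch below multiplies it out for a hypothetical one billion responses. The query volume and the function names are assumptions made for the example. Only the 16-ounce bottle and the 10-50 response range come from the text.

```python
# Scaling the "one 16-ounce bottle per 10-50 responses" figure.
# The one-billion-response volume below is a hypothetical input.

OUNCES_PER_BOTTLE = 16
LITERS_PER_US_FLUID_OUNCE = 0.0295735


def water_liters(num_responses: float, responses_per_bottle: float) -> float:
    """Liters of water consumed for a given number of responses."""
    bottles = num_responses / responses_per_bottle
    return bottles * OUNCES_PER_BOTTLE * LITERS_PER_US_FLUID_OUNCE


def water_range_liters(num_responses: float) -> tuple[float, float]:
    """Low and high estimates, using 50 and 10 responses per bottle."""
    low = water_liters(num_responses, responses_per_bottle=50)
    high = water_liters(num_responses, responses_per_bottle=10)
    return low, high


low, high = water_range_liters(1_000_000_000)
print(f"{low:,.0f} to {high:,.0f} liters per billion responses")
```

For one billion responses, this gives a range of roughly 9.5 million to 47 million liters, depending on which end of the 10-50 range applies.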

Reducing carbon emissions has, of course, been a major focus of data center design and operations. Reducing water consumption is the next challenge we must take on, especially as we ramp up this new class of data center: AI factories.

The Emergence of Waterless AI Factories

With AI factories being 5-10 times denser than traditional data centers, liquid cooling is the only way to cool AI silicon and remove this amount of heat. In particular, let’s take a closer look at two-phase, direct-to-chip liquid cooling, which can enable a path to truly waterless AI factories. This technology is a game changer because it uses no water, takes up little real estate, requires much less power, and makes it possible to recapture energy from the heat that the AI infrastructure generates. This energy can be used to heat nearby buildings or even power additional equipment in the data center.

ZutaCore®’s two-phase, direct-to-chip technology (known as HyperCool®) has been proven to cool the hottest AI accelerators (GPUs) of 2,800 watts and beyond at a PUE as low as 1.05 while also increasing compute density in the rack. This delivers 10-20% better energy efficiency with dynamic cooling, smaller pumps, and no performance degradation over time. In this closed-loop system, the heat transfer fluid does not need to be replaced and poses none of the risks that water presents in terms of leakage or corrosion. The system allows the higher server density that AI factories need for low-latency system interconnects, consuming up to 50% less space than air-cooled data centers and up to 75% less space than immersion cooling.
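PUE (power usage effectiveness) is the ratio of total facility energy to the energy delivered to IT equipment, so a PUE of 1.05 means cooling and other overhead add about 5% on top of the IT load. The sketch below works that out for a hypothetical 100 megawatt IT load. The comparison PUE of 1.5 is an illustrative assumption, not a figure from this article, and the function names are hypothetical.

```python
# What a given PUE implies for non-IT (cooling and other) power.
# PUE = total facility power / IT equipment power.


def total_facility_mw(it_load_mw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_mw * pue


def overhead_mw(it_load_mw: float, pue: float) -> float:
    """Power spent on everything other than IT equipment."""
    return total_facility_mw(it_load_mw, pue) - it_load_mw


it_load = 100  # megawatts of IT load (hypothetical)

for pue in (1.05, 1.5):  # 1.05 is from the text; 1.5 is an assumed comparison
    print(f"PUE {pue}: {overhead_mw(it_load, pue):.1f} MW of overhead")
```

For the same IT load, the overhead in this example falls from 50 megawatts at a PUE of 1.5 to about 5 megawatts at a PUE of 1.05.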

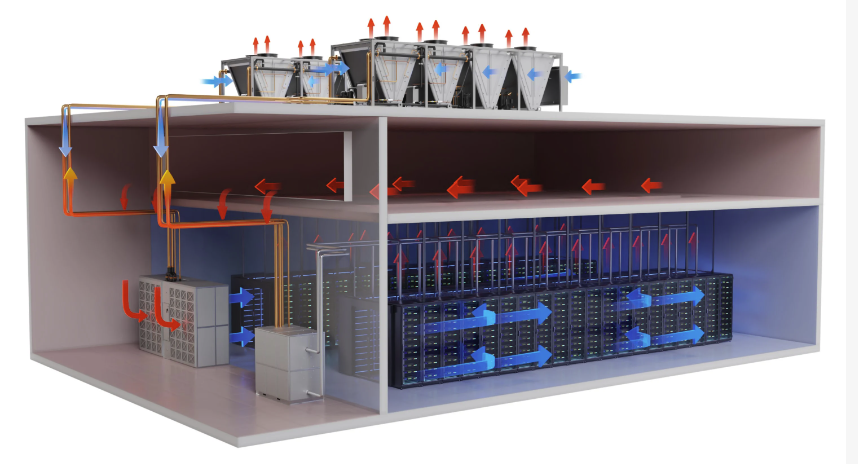

A real-life example can be found in the recent announcement by ZutaCore and Munters, a global leader in energy-efficient air treatment and climate solutions, that they will deliver a waterless data center architecture for AI factories. As part of their partnership, Munters has integrated ZutaCore HyperCool at the server and rack level with the Munters closed-loop system, which removes heat from the data center without a facility water loop. Munters’ SyCool uses a refrigerant-based thermosiphon loop to extract the heat dissipated by HyperCool at each rack and transport it up risers to modular condensers on the roof, where the heat is rejected to ambient. Alternatively, the heat can be reused for other applications, such as heating adjacent facilities. Watch this video explaining this solution.

Sustainability and AI Can Go Hand in Hand

As highlighted above with Munters and ZutaCore, it is possible to bring AI to the masses in a sustainable and cost-effective way. Data centers don’t need to drink the world’s water supply to enable ChatGPT-like features and convenience. To get there, however, they need to adopt technologies such as two-phase, direct-to-chip liquid cooling.

So the next time you see or hear about a data center or AI factory coming to a location near you, ask about its water usage. If it’s thirsty for water, let’s hope the designers are thinking about water reduction strategies and considering the options that cut the amount of water the AI factory needs. Water is, after all, for people, not servers.

To learn more about HyperCool and how it drives the future of AI sustainability, download this eBook.
